Artificial intelligence (AI) disciplines like deep learning have been the talk of the industry for the past several years, and they continue to gain steam. The research is in: AI-enabled organizations see a 19 percent average improvement in decision speed and a 21 percent average improvement in decision accuracy. How can you best position your company to capitalize on these benefits? What server technology can set your company apart as a top competitor?

Enterprise Strategy Group (ESG) considers accelerators one of the three essential compute technologies for AI pursuits. In ESG's assessment, widespread use of accelerators, alongside automated servers and converged/hyperconverged infrastructure, is key to whether an organization can be considered AI-enabled.

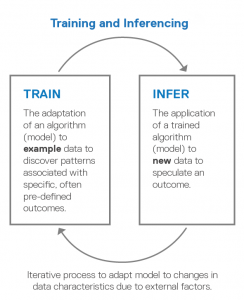

Why the emphasis on accelerators for AI initiatives like deep learning? To understand the logic behind the research firm’s criteria, it helps to understand the fundamentals of deep learning. Roughly, it can be broken down into two pursuits: training and inferencing. Training is the process of teaching a model: you feed it example data, and a learning algorithm adjusts the model until its outputs become accurate. Inferencing means running the trained model on new data in an application: you embed the model in your code and use its outputs to glean fresh insights. Training and inferencing work in partnership: as your model encounters new data, it must be retrained and refined.

Diagram source: Retail Analytics with Malong RetailAI® on Dell EMC PowerEdge Servers
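
To make the training/inferencing split described above concrete, here is a minimal sketch in plain Python. It is a toy, not a deep learning workload: it fits a one-feature linear model on the CPU, and the function names (`train`, `infer`), the data, and the learning-rate settings are all hypothetical choices made for illustration. A real project would likely use a deep learning framework running on accelerators.

```python
# Toy illustration of training vs. inferencing (assumed example, not from the eBook).

def train(samples, weight=0.0, bias=0.0, lr=0.02, epochs=5000):
    """Training: fit y ~ weight * x + bias by gradient descent on mean squared error."""
    n = len(samples)
    for _ in range(epochs):
        # Gradients of the mean squared error with respect to weight and bias.
        grad_w = sum(2 * (weight * x + bias - y) * x for x, y in samples) / n
        grad_b = sum(2 * (weight * x + bias - y) for x, y in samples) / n
        weight -= lr * grad_w
        bias -= lr * grad_b
    return weight, bias


def infer(model, x):
    """Inferencing: apply the already-trained model to an input it has not seen."""
    weight, bias = model
    return weight * x + bias


# 1. Train on the initial data (hypothetical points generated from y = 2x + 1).
initial_data = [(1.0, 3.0), (2.0, 5.0), (3.0, 7.0), (4.0, 9.0)]
model = train(initial_data)

# 2. Run inference on new input inside the "application".
prediction = infer(model, 10.0)

# 3. As new data arrives, retrain, starting from the current parameters.
new_data = initial_data + [(5.0, 11.0), (6.0, 13.0)]
model = train(new_data, *model)
```

Even in this toy, training loops over the whole data set thousands of times, while inferencing is a single cheap evaluation per input. That asymmetry grows dramatically with deep neural networks, which is one reason the two phases tend to place different demands on hardware.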

To complete the tasks involved in training and inferencing, you need substantial compute power bolstered by accelerator technology. The two best-known options in this space are graphics processing units (GPUs) and field-programmable gate arrays (FPGAs). Both rely on parallel processing to reduce processing time, but there are significant differences between the two. GPUs were originally designed to handle the complex math operations needed for graphics; they work well for accelerating general compute and are well suited to training. FPGAs have programmable logic blocks that can be optimized to run already-trained models quickly, which makes them a strong fit for inferencing.

Back to that ESG study: Researchers found that the most AI-enabled organizations are implementing initiatives directly tied to deep learning. Sixty-four percent of such companies are developing, deploying, and tuning AI models in production for natural language processing, while 58 percent are engaged in the same activities for image classification. These organizations accrue major business benefits from their AI pursuits.

AI-enabled organizations are:

- 8x more likely than Stage 1 (the least AI-enabled) orgs to say AI has been very effective at driving value.

- 2x more likely to tie 10 percent or more revenue to AI initiatives.

- 2x more likely to experience a time to value shorter than expectations.

It’s worth your while to invest in top-notch accelerator-enhanced servers for deep learning. The complex, continually updated neural networks essential to this discipline demand immense processing power, and accelerators are a prerequisite for meeting that demand. To learn more about the platforms and technology that facilitate deep learning, as well as the best Dell EMC PowerEdge servers for the job, download our new eBook, The Server Technology Vital to Deep Learning.

Follow us and join the conversation on Twitter @DellEMCServers.