The Data Accelerator from Dell Technologies breaks through I/O bottlenecks that impede the performance of HPC workloads

In high performance computing, big advances in system architectures are seldom made by a single company working in isolation. To raise the system performance bar to a higher level, it typically takes a collaborative effort among technology companies, system builders and system users. And that’s what it took to develop the Data Accelerator (DAC) from Dell Technologies.

This solution to a long-running I/O challenge drew on the expertise of HPC specialists from Dell Technologies, Intel, the University of Cambridge and StackHPC. The result, DAC, enables the next generation of data‑intensive workflows in HPC systems by providing NVMe‑based storage that removes the bottlenecks that slow system performance.

How so? DAC is designed to make optimal use of modern server NVMe fabric technologies to mitigate I/O‑related performance issues. To accelerate system performance, DAC proactively copies data from a cluster’s disk storage subsystem and pre-stages it on fast NVMe storage devices that can feed data to the application at a rate required for top performance. Even better, this unique architecture allows HPC administrators to leave data on cost-effective disk storage until it is required by an application, at which point the data is cached on the DAC nodes.
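The pre-staging idea described above can be illustrated with a small sketch. This is not the DAC orchestrator or its API: two ordinary directories stand in for the disk tier and the NVMe tier, and the function name `stage_in` and its parameters are hypothetical, chosen only for illustration.

```python
import shutil
from pathlib import Path


def stage_in(relative_paths, slow_root, fast_root):
    """Copy the listed files from the slow tier to the fast tier.

    Hypothetical helper: plain directories stand in for the disk-based
    file system (slow_root) and the NVMe storage (fast_root). The source
    files are left in place, so the slow tier remains the home of the data.
    """
    slow_root, fast_root = Path(slow_root), Path(fast_root)
    staged = []
    for rel in relative_paths:
        src = slow_root / rel
        dst = fast_root / rel
        # Recreate the directory layout on the fast tier before copying.
        dst.parent.mkdir(parents=True, exist_ok=True)
        shutil.copy2(src, dst)
        staged.append(dst)
    return staged


if __name__ == "__main__":
    import tempfile

    # Temporary directories play the role of the two storage tiers.
    with tempfile.TemporaryDirectory() as slow, tempfile.TemporaryDirectory() as fast:
        (Path(slow) / "job42").mkdir()
        (Path(slow) / "job42" / "particles.dat").write_bytes(b"example data")
        for path in stage_in(["job42/particles.dat"], slow, fast):
            print("staged:", path)
```

In a real deployment the copy would be scheduled ahead of the job so that the application reads only from the fast tier; the sketch shows just the copy step.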

Plunge Frozen

Cryogenic electron microscopy (Cryo-EM) data processing with Relion is one of the key applications that must analyze and process very large data sets. Greater instrument resolution brings challenges: the volume of data ingested from the microscopes increases dramatically, and the compute requirements for processing and analyzing that data explode.

The Relion refinement pipeline is iterative: it makes multiple passes over the same data to find the best structure. Because the total volume of data can reach tens of terabytes, it exceeds the memory capacity of almost all current-generation computers, so the data must be read repeatedly from the file system. The bottleneck in application performance therefore moves to I/O.
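A back-of-the-envelope calculation shows why repeated reads make I/O the bottleneck. The sketch below assumes every pass re-reads the full data set at a constant aggregate bandwidth. The pass count and both bandwidth figures are placeholders chosen for illustration, not measurements from Cumulus or DAC.

```python
def io_time_seconds(dataset_bytes, passes, bandwidth_bytes_per_s):
    """Estimate the time spent reading a data set `passes` times.

    Simplifying assumption: each pass re-reads everything from the file
    system at a constant aggregate bandwidth, with no caching in memory.
    """
    if bandwidth_bytes_per_s <= 0:
        raise ValueError("bandwidth must be positive")
    return dataset_bytes * passes / bandwidth_bytes_per_s


if __name__ == "__main__":
    TB = 10**12
    GB = 10**9
    dataset = 20 * TB  # a data set in the tens-of-terabytes range
    passes = 10        # hypothetical number of refinement iterations

    # Hypothetical aggregate read bandwidths for the two tiers.
    for label, bandwidth in (("disk tier", 5 * GB), ("NVMe tier", 200 * GB)):
        minutes = io_time_seconds(dataset, passes, bandwidth) / 60
        print(f"{label}: about {minutes:.0f} minutes of read time")
```

Because read time scales linearly with the number of passes, any gap in bandwidth between the two tiers is multiplied by every iteration of the pipeline.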

A recent challenging test case produced by Cambridge research staff is 20TB in size. For this test case, moving from the traditional Lustre file system on Cumulus to the new NVMe-based DAC reduced I/O wait times from over an hour to just a couple of minutes.

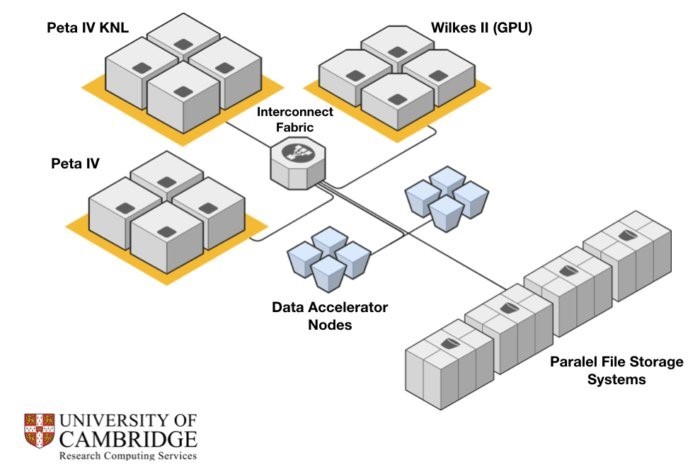

The Data Accelerator in the Cumulus supercomputer incorporates components from Dell Technologies, Intel and Cambridge University, along with an innovative orchestrator built by the University of Cambridge and StackHPC.

With its innovative features, DAC delivers one of the world’s fastest open‑source NVMe storage solutions. In fact, with the initial implementation of DAC, the Cumulus supercomputer at the University of Cambridge reached No. 1 on the June 2019 IO500 list. That means it debuted as the world’s fastest HPC storage system, nearly doubling the performance of the second‑place entry.

And here’s where this story gets even better. Today, Dell Technologies is sharing the goodness of DAC by making the solution available to the broad community of HPC users via an engineering-validated system configuration covering DAC server nodes, memory, networking, PCIe storage and NVMe storage.

Ready for a deeper dive?

- For a closer look at DAC, including system configuration details, see the Data Accelerator solution brief.

- For a detailed technical examination of the DAC architecture and development effort, see “HPC Innovation Exchange: The Data Accelerator.”

- To download the DAC software stack, visit the Cambridge University GitHub page.

- Read the Dell Technologies case study with Cambridge University.