This is the fourth post in a multi-part series exploring why our IT organization is aggressively transforming EMC’s corporate datacenters into Private Clouds. Previously, I described IT’s strategy shift, its newfound sense of urgency, and navigation through some “cloud fog.”

In this post we look at the unusual course EMC IT charted for its Private Cloud journey, and how the team approached selling its plan to our top execs.

Getting to “Go”

As I described in my previous post, EMC IT’s reaction to the Private Cloud concept was similar to that of many customers: a mix of relief, excitement, and questions. There were implications that would need to be worked out, but our IT folks felt confident they were already on the right path, and could proceed on their plans with some minor tweaks.

At the time, to be completely frank, I didn’t expect our IT organization to do much more than “embrace the vision.” That’s easy enough. Actually executing on it, not so much. Sure, I’d heard about IT wanting to become EMC’s “first and best” customer, but I’d heard that kind of slogan at several companies.

I knew there was a huge datacenter migration from Massachusetts to North Carolina to be done. EMC IT’s approach, however, turned out to be unusually aggressive. Instead of doing the migration first, then moving on to datacenter transformation—or vice-versa—IT decided to use the move as a forcing function, and do both simultaneously (or as close to that as possible). Furthermore, IT declared its intent to accomplish the migration without moving a single piece of hardware. The migration itself would be virtualized.

Imagine my surprise when I found out our IT organization had already virtualized over half of our systems, and was accelerating its march to virtualize 100% of them (or as close as possible) by the end of this year.

Ok, either project alone is daunting. We’re talking about an awful lot of moving parts here. Thousands of servers. Tens of thousands of users. Hundreds of applications vital to operating a fifteen-billion-dollar company that simply must continue running without disruption.

And our IT people want to do both? At the same time? And the drop-dead, turn-in-the-keys date for the old facility is coming how soon?

At this point, you’re probably wondering, “How on Earth could a company CIO ever get senior business management buy-in for a monstrous undertaking like this?” I know I was. The approach our CIO, Sanjay Mirchandani, took was amazingly simple. The key was to make the project simple to understand, and the decisions simple to make. Doing so can’t be that easy, can it?

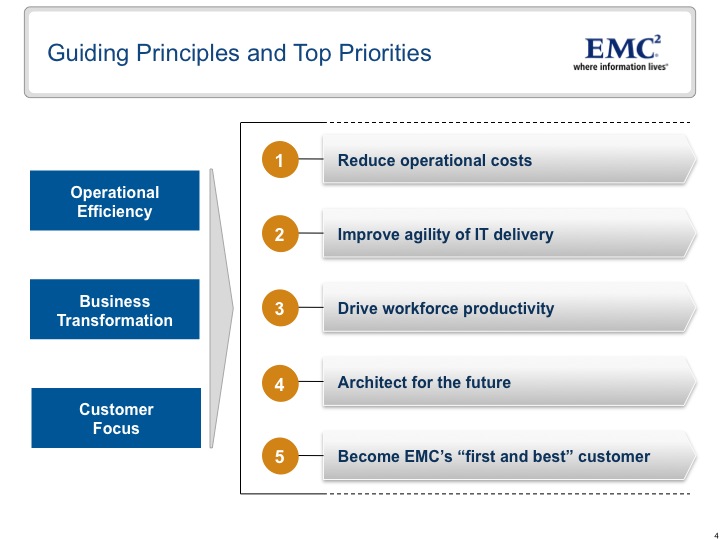

Sanjay’s first step was to lay out EMC IT’s guiding principles and priorities on a single sheet of paper. It took me over 460 words to describe them in my first post on this topic. Here’s Sanjay’s one-slide version.

This list wasn’t made up out of thin air, or IT staffers’ imaginations. (No, I won’t.) It had been generated through discussions Sanjay had with his peers across the company, and with our CEO. So getting agreement on this wasn’t hard.

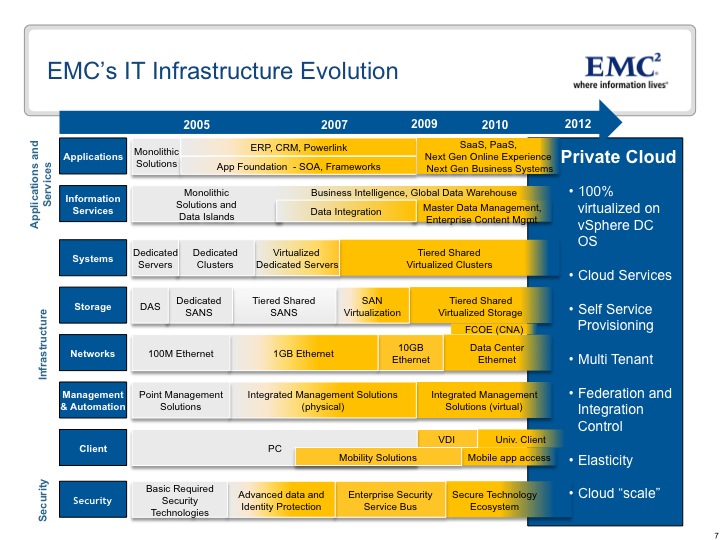

Once that was done, Sanjay needed to describe the journey—how we take all the IT stuff we have today and transform it into the environment we want tomorrow—on a single sheet of paper.

Ok, let’s be honest. IT folks, like software engineers (and I’ve been both), will readily produce big 3-ring architecture binders to describe what they’re doing. But senior business executives don’t need, or want, to understand all that. Distilling the essentials out of all those binders took some effort. With Sanjay Mirchandani’s urging, coaxing—frankly, I don’t know how he did it—his team managed to condense the roadmap for EMC IT’s technical journey down to a single page.

Here’s a recent version of the slide. Much of the history described in here is probably familiar to most of you.

Nine years or so ago, we were running all point solutions at EMC. When users in our business units requested IT resources, they’d get server, network and storage resources that were dedicated to each application. It wasn’t exactly unusual for multiple groups within EMC running the same application to each have their own dedicated application and resource stack, either. It’s no surprise, then, that we ended up with the kind of server, network and storage “sprawl” that has become the bane of IT administrators’ existence.

Around 2003, we started implementing Information Lifecycle Management practices in our own systems at EMC, and began consolidating our storage. We moved from dedicated SANs to tiered SANs, and then on to virtualized SANs.

Our servers went through a similar progression: applications on dedicated servers were consolidated onto server clusters, and then virtualized onto dedicated VMware clusters. More recently, we consolidated further onto shared, tiered VMware clusters.

Let’s stop here for a minute. The organization of this chart feels familiar, and seems natural, doesn’t it? Look again at how things are categorized. Most of us in IT tend to organize product types—and IT staffs—the same way. It’s been no different at EMC.

That kind of org structure made sense, and served our industry well for several years. But that same structure increasingly gets in the way of achieving the kind of delivery agility our IT folks had set as an operational priority. Consolidation and pooled resources are a good start, but they’re not enough.

We need to fundamentally change the way we operate this stuff. In other words, move away from array-centric, server-centric, and even application-centric management. Instead, we need to blur those lines and manage pooled, shared infrastructure. And we need to hide all the technological gorp from the business users running their apps on it.

Obviously, we need management tools that can span those boundaries. That should be easy; there’s a lot out there meant to do just that. But they also need to deal with the dramatically increased fluidity virtualization brings. Management products built for a static, hardware-based world just don’t cut it any more.

That’s why our IT people at EMC find Ionix so attractive. Its design center assumes IT is in a constant state of transition—a description, by the way, that really resonates for me personally. To be fair, Ionix doesn’t address everything we need yet. The good news is that our IT folks are engaged with Ionix engineers to help prioritize, test, and get needed functionality into our customers’ hands, and into EMC’s production infrastructure.

We also have some work to do (actually, a lot of work to do) on the human side. But that’s a topic for another discussion.

Ok, so we got to a single sheet of paper depicting the progression and merging of our technology silos. Our IT team next needed to outline a plan for IT to make all this happen. And, of course, it needed to be on a single sheet of paper.

I’ll describe that in my final post in this series.